Our exploration of generative artificial intelligence (GenAI) started with a straightforward question: How can it help us create better learning experiences?

It’s the question we always ask ourselves as we look for ways to improve our solutions and help students succeed. To answer it for GenAI, we talked to educators. In early 2024, we interviewed college faculty to get their feedback on several ideas for new ways to use GenAI. One accounting professor lit up at the idea of a feature in our reading solution that could “explain it differently” for students.

This professor described begging students who hadn’t understood material in a lecture to come to office hours instead of emailing. “There is no one way to explain something,” the professor told us. “Some are gonna get it, some won't; you change a word and they'll get it.” The tool we were proposing would offer students “a way to say it two or three different ways until it clicks.”

This was a clearly appropriate and promising use of GenAI: being able to highlight a section of text and ask for another explanation would keep students on track, ideally increasing their engagement with the material (and, in turn, their learning). And an interface that supports only text selection and a few carefully chosen buttons, rather than an open-ended chat, would help learners stay focused on their reading instead of going down rabbit holes with a GenAI chatbot.

From this idea came AI Reader, a tool that helps learners gain a deeper understanding of their course materials through alternative explanations, simplifications, and self-generated quizzes. The tool draws on McGraw Hill’s trusted, high-quality content for accuracy and reliability, and a sophisticated set of guardrails helps ensure that all responses are safe and helpful.

How did we roll it out?

We rolled out AI Reader gradually in our eBooks, carefully evaluating how it was being used and making improvements along the way. We started in fall 2024 with a few science and communication courses. Each time we added a new discipline, we first reviewed the tool’s outputs with subject matter experts in that discipline to ensure that the AI-generated content was accurate, relevant, engaging, appropriate for the audience, and aligned with McGraw Hill’s content guidelines. In many cases, we revised our prompts for that discipline to improve response quality, incorporating pedagogical nuances suggested by the subject matter experts.

The optional tool is now embedded in more than 600 titles in our McGraw Hill Connect solutions and is expanding into other products across the McGraw Hill portfolio, such as First Aid Forward for medical students.

How do we know it works?

Our value proposition is connected to learning outcomes, so we need to be rigorous with measurement to ensure that our tools are effectively improving the learning experience. We built AI Reader to keep students engaged with their reading, so a natural first research question is: Does higher student use of AI Reader lead to an increase in engagement with our learning solutions?

An easy approach would have been to compare how much time students who use AI Reader spend with their course materials against how much time those who don’t use it spend. Sure enough, when students use AI Reader, they spend up to twice as long with their course materials as when they don’t. But we knew this difference could simply reflect the fact that students who already spend more time studying are also more likely to use tools like AI Reader. We wanted to know how using AI Reader changed students’ study habits, so we had to get a bit more methodologically rigorous.

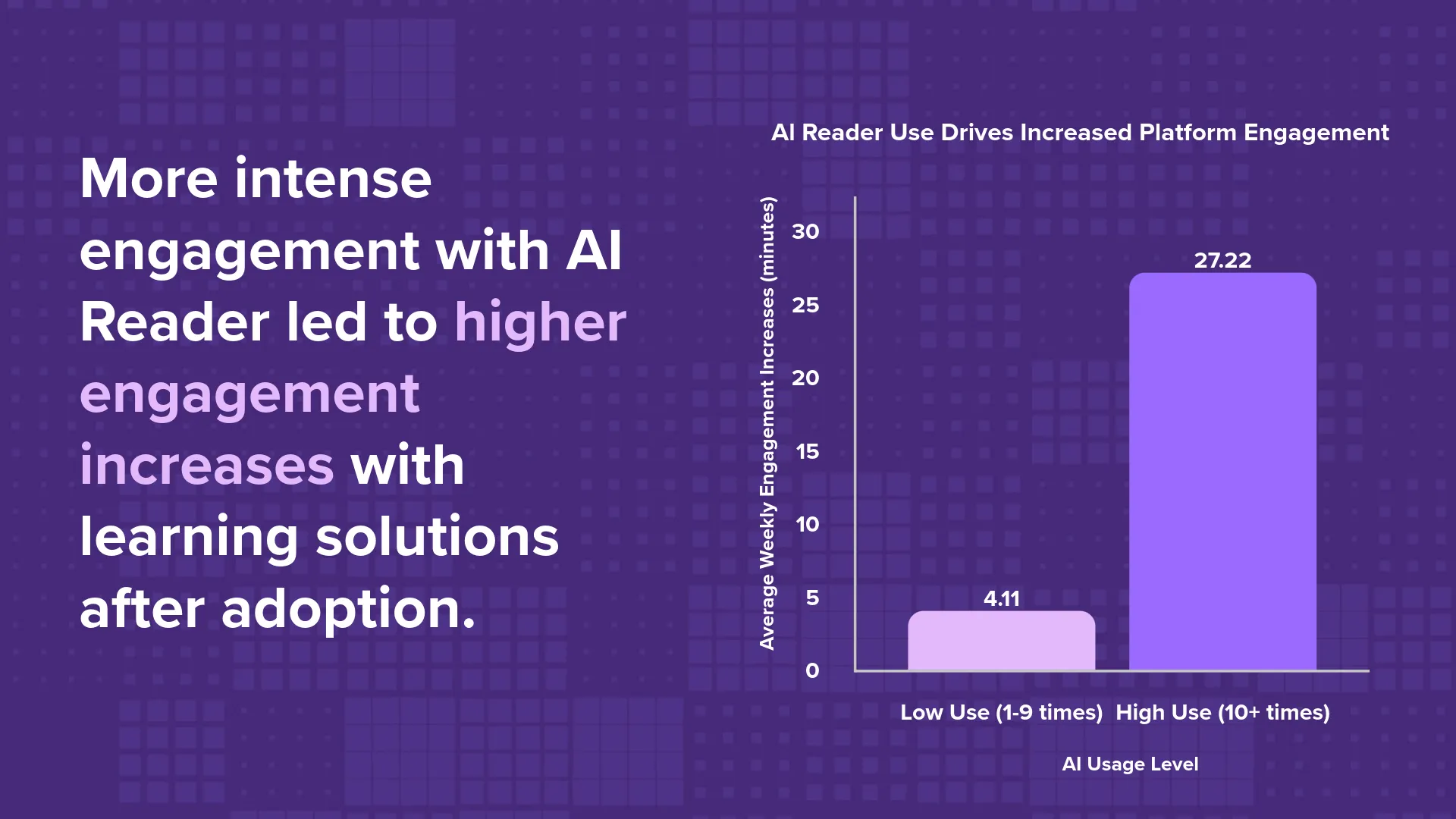

We conducted a quasi-experimental study (a design that estimates cause and effect without randomly assigning learners to groups) of 2,000+ anonymized students in three courses in which AI Reader was turned on mid-course, so we could compare the behavior of the same students before and after its introduction. Most of those students never engaged with AI Reader (and may not have known the tool existed), some used it a few times, and our “power users” chose to use it at least 10 times. Because these students made different choices about how to study, including whether and how to use AI Reader, we needed to account for those choices to accurately measure the effect of AI Reader’s introduction on their behavior. We used a fancy mathematical model (a mixed-effects difference-in-differences panel regression with inverse probability weights, for the stats experts reading this) to statistically “control for” both student characteristics and normal fluctuations in usage over the course of a semester.

This analysis revealed a strong positive effect of AI Reader’s introduction on engagement for both low and high users of the tool, with larger effects for heavier users. We couldn’t randomly assign students to use AI Reader or not, since using the optional tool is the student’s choice. But because our analysis controlled for factors like students’ pre-rollout engagement levels, unobserved individual differences, general weekly trends, and self-selection bias, we have a reasonable scientific justification for claiming that AI Reader increased these students’ engagement with their course materials.
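For readers curious about the mechanics, here is a minimal sketch of the core idea behind a difference-in-differences estimate with inverse probability weights. It is deliberately simplified and is not our actual model: it uses just two periods (before and after rollout) and two groups (users and non-users), omits the mixed-effects structure and week-level trends, and assumes each student’s propensity score (the estimated probability of choosing to use AI Reader) has already been computed. The names `Obs`, `ipw`, `weighted_mean`, and `did_estimate`, and all of the numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Obs:
    """One hypothetical student-period observation (all fields illustrative)."""
    student: str
    user: bool         # True if the student used AI Reader after rollout
    post: bool         # True for the period after AI Reader was turned on
    minutes: float     # made-up engagement time for that period
    propensity: float  # assumed pre-estimated P(student chooses to use AI Reader)


def ipw(o: Obs) -> float:
    # Inverse probability weight: 1/p for users, 1/(1 - p) for non-users,
    # so students who were unlikely to make the choice they made count more.
    return 1.0 / o.propensity if o.user else 1.0 / (1.0 - o.propensity)


def weighted_mean(obs: list[Obs]) -> float:
    total_weight = sum(ipw(o) for o in obs)
    return sum(ipw(o) * o.minutes for o in obs) / total_weight


def did_estimate(data: list[Obs]) -> float:
    def cell(user: bool, post: bool) -> float:
        return weighted_mean([o for o in data if o.user == user and o.post == post])

    # Change over time for users minus change over time for non-users:
    # the non-user change stands in for what would have happened anyway.
    users_change = cell(True, True) - cell(True, False)
    nonusers_change = cell(False, True) - cell(False, False)
    return users_change - nonusers_change


data = [
    Obs("A", True, False, 60, 0.5), Obs("A", True, True, 90, 0.5),
    Obs("B", True, False, 30, 0.25), Obs("B", True, True, 75, 0.25),
    Obs("C", False, False, 50, 0.5), Obs("C", False, True, 53, 0.5),
    Obs("D", False, False, 40, 0.75), Obs("D", False, True, 46, 0.75),
]
print(round(did_estimate(data), 2))  # 35.0
```

In this toy data, users’ weighted engagement rises by 40 minutes and non-users’ by 5, so the estimated effect is 35 minutes. Because each group is compared with its own pre-rollout baseline, students who were already heavy studiers do not inflate the estimate just by being heavy studiers; a full model like the one described above layers terms such as per-student random effects and week-level trends on top of this idea.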