Below are some of the key takeaways from our study:

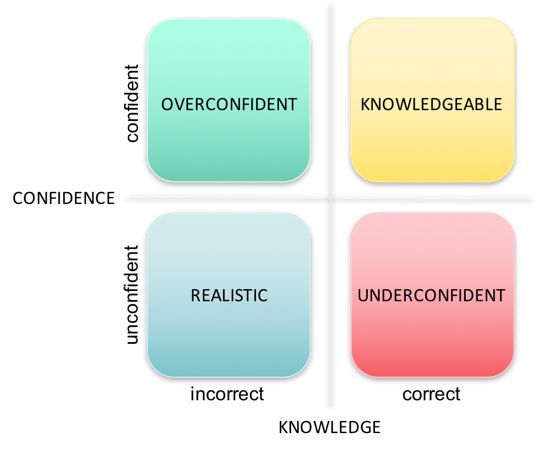

- Students’ perception of their abilities is asymmetric: they are rarely underconfident (fewer than 1% of responses) but often overconfident in their knowledge (almost 20% of responses).

- Students’ confidence is correlated with their actual performance. However, more successful students also tend to be more overconfident: overconfidence is concentrated not among the weakest students but among the strongest.

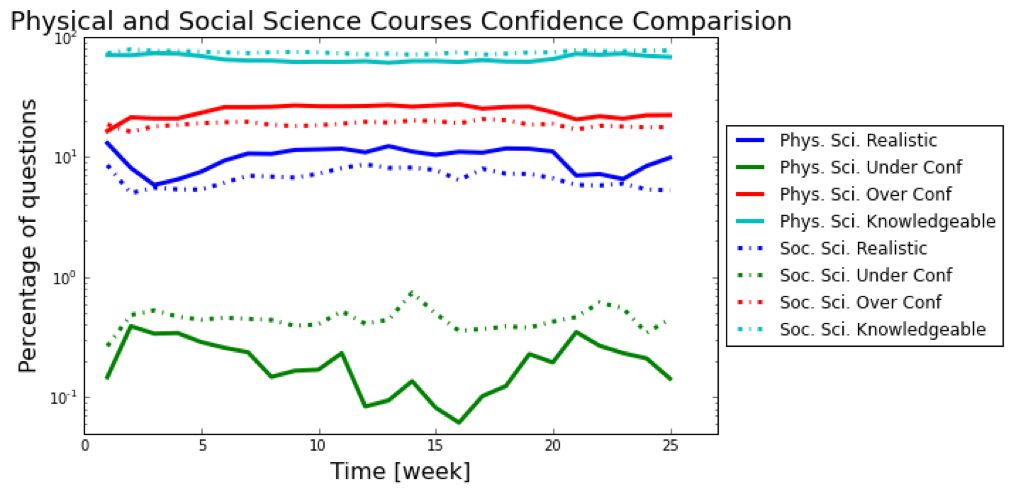

- Students tend to be significantly more overconfident in physical science courses than in social science courses. However, stronger students are more likely to be overconfident in the social sciences than in the physical sciences.

- Students’ confidence fluctuates throughout a course, and it varies most in the first and last weeks.

- Men are more likely than women to be overconfident, but men and women adjust their confidence similarly after receiving feedback that they answered incorrectly.

These findings suggest that different students, and students in different contexts, need different amounts of support in developing appropriate levels of confidence. By understanding where overconfidence (and underconfidence) is particularly prevalent, we can determine whether existing learning systems are inadvertently supporting some students less effectively than others.

These findings feed into our work to develop products that better differentiate instruction for different learners. Specifically, we are building this research into our efforts to measure more accurately the degree to which a student’s confidence is consistently too high or too low. Ultimately, by recognizing whether an individual student’s confidence is too high, too low, or just right, we can provide learning supports that help students better know what they know.

References

Aghababyan, A., Lewkow, N., & Baker, R. (2017, March). Exploring the asymmetry of metacognition. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference (pp. 115–119). ACM.

Aghababyan, A., Lewkow, N., Burns, S., & Suarez Garcia, J. (2018, March). Gender differences in confidence reports and in reactions to negative feedback within adaptive learning platforms. Poster presented at the 8th International Conference on Learning Analytics & Knowledge, Sydney, Australia. ACM.

Barrick, M. R., Mount, M. K., & Judge, T. A. (2001). Personality and performance at the beginning of the new millennium: What do we know and where do we go next? International Journal of Selection and Assessment, 9(1–2), 9–30. https://doi.org/10.1111/1468-2389.00160

Poropat, A. E. (2009). A meta-analysis of the five-factor model of personality and academic performance. Psychological Bulletin, 135(2), 322–338. https://doi.org/10.1037/a0014996

Stiggins, R. J. (1999). Assessment, student confidence, and school success. Phi Delta Kappan, 81(3), 191–198.

Ani Aghababyan, Ph.D.

As a Sr. Data & Learning Scientist at McGraw-Hill, Ani is the lead researcher on the design and development of an adaptive learning algorithm, and she also evaluates the learning science implications of the product. In her work, Ani uses large-scale educational data to observe and improve student learning trajectories within digital learning solutions. In particular, her research focuses on the metacognitive factors of learning that may influence students’ performance and that can help identify optimal learning pathways to better outcomes. As part of her goal to improve student results in McGraw-Hill products, Ani has been publishing the results of her research with colleagues at partner universities.

Dr. Aghababyan is also a lecturer in Northeastern University’s Master of Professional Studies in Analytics program, where she teaches statistics and machine learning using R. Additionally, she has served as a chair of the Doctoral Consortium and Practitioner Tracks for the Learning Analytics and Knowledge conference. Ani Aghababyan holds an M.Sc. in Information Systems, an MBA, and a Ph.D. in Instructional Technology & Learning Sciences.

Ryan Baker, Ph.D.

Ryan Baker is an Associate Professor at the University of Pennsylvania and Director of the Penn Center for Learning Analytics. His lab conducts research on engagement and robust learning within online and blended learning, seeking actionable indicators that can be used today but that predict future student outcomes. Baker has developed models that can automatically detect student engagement in over a dozen online learning environments, and he has led the development of an observational protocol and app for field observation of student engagement that has been used by over 150 researchers in 4 countries. Predictive analytics models he helped develop have been used to benefit hundreds of thousands of students, over a hundred thousand people have taken MOOCs he ran, and he has coordinated longitudinal studies spanning over a decade.

He was the founding president of the International Educational Data Mining Society and the first technical director of the Pittsburgh Science of Learning Center DataShop. He currently serves as Editor of the journal Computer-Based Learning in Context, as an Associate Editor of two journals, and as Co-Director of the MOOC Replication Framework (MORF). Baker has co-authored published papers with over 300 colleagues.